A Mixed Quantization Approach for Data-Free Quantization of LLMs

2025-02-23

1 min read


Justin Zhang

Yanbin Liu

Weihua Li

Xiaodan Wang

Quan Bai

Image credit: scitepress

Abstract

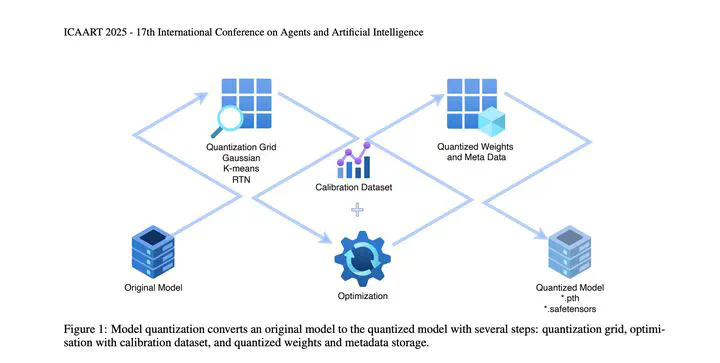

Large Language Models (LLMs) have demonstrated significant capabilities in intelligent tasks such as natural language comprehension, content generation, and knowledge retrieval. However, training and deploying these models requires substantial computational resources, creating a significant barrier to developing AI applications and conducting research. Various model compression techniques have been developed to reduce this demand for computational resources. Nonetheless, there has been limited exploration of high-level quantization strategies that offer more flexibility in balancing the trade-off between memory usage and accuracy. We propose an effective mixed-quantization method, named MXQ, to bridge this research gap and achieve a better memory-accuracy balance. Specifically, we observe that the weight distributions of LLMs vary considerably from layer to layer, resulting in different tolerances to quantization errors. Motivated by this, we derive a novel quantization optimisation formulation that solves for the layer-wise quantization parameters while enforcing the overall quantization memory budget as a constraint. The new formulation can be solved efficiently by converting it into a mixed integer programming problem. Experiments show that our method can meet the 1% accuracy-loss target with an additional bit budget, or further reduce memory usage, on Llama models. This unlocks a wide range of quantization options and simplifies the memory-accuracy trade-off.
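The core idea in the abstract, choosing per-layer quantization parameters that minimize error under a total memory budget, can be illustrated with a small Python sketch. It is not the MXQ implementation, and its simplifications are our own assumptions rather than the paper's: the only per-layer choice is a bit-width, the error proxy is the mean squared error of min-max round-to-nearest quantization, memory is counted as bits × number of weights (scales and zero-points are ignored), and the integer program is solved exactly by dynamic programming over the budget (a multiple-choice knapsack) instead of a mixed integer programming solver. The helpers `quant_error` and `allocate_bits` and the toy layers are hypothetical.

```python
from typing import List, Sequence, Tuple


def quant_error(weights: Sequence[float], bits: int) -> float:
    """Mean squared error of min-max round-to-nearest quantization (an assumed error proxy)."""
    lo, hi = min(weights), max(weights)
    levels = 2 ** bits - 1                          # number of steps between lo and hi
    scale = (hi - lo) / levels if hi > lo else 1.0
    total = 0.0
    for w in weights:
        q = min(max(round((w - lo) / scale), 0), levels)  # integer code in [0, levels]
        total += (w - (lo + q * scale)) ** 2              # squared dequantization error
    return total / len(weights)


def allocate_bits(
    layers: List[Sequence[float]],
    candidate_bits: Sequence[int],
    budget: int,
) -> Tuple[float, List[int]]:
    """Pick one bit-width per layer minimizing summed error s.t. sum(bits * n_weights) <= budget.

    Exact dynamic program over the memory spent so far (multiple-choice knapsack).
    """
    # state: memory used so far -> (lowest total error, bit-widths chosen for earlier layers)
    states = {0: (0.0, [])}
    for weights in layers:
        errors = {b: quant_error(weights, b) for b in candidate_bits}
        nxt = {}
        for used, (err, chosen) in states.items():
            for b in candidate_bits:
                cost = used + b * len(weights)
                if cost > budget:
                    continue  # this choice would exceed the memory budget
                cand = (err + errors[b], chosen + [b])
                if cost not in nxt or cand[0] < nxt[cost][0]:
                    nxt[cost] = cand  # keep only the best assignment per memory level
        states = nxt
        if not states:
            raise ValueError("budget is too small for any bit assignment")
    return min(states.values(), key=lambda s: s[0])


if __name__ == "__main__":
    # Hypothetical toy layers: a narrow one, one with an outlier, and an irregular one.
    layers = [
        [i / 100 for i in range(-20, 21)],
        [i / 1000 for i in range(-20, 21)] + [0.5],
        [((i * 37) % 23 - 11) / 50 for i in range(30)],
    ]
    n_weights = sum(len(layer) for layer in layers)
    err, bits = allocate_bits(layers, [2, 3, 4], budget=3 * n_weights)
    print(f"bit-widths per layer: {bits}, total MSE proxy: {err:.3e}")
```

Setting `budget=3 * n_weights` caps the average at 3 bits per weight while letting layers that tolerate quantization poorly (such as the one with an outlier) take more bits at the expense of others. Keeping only the lowest-error assignment for each amount of memory used is what makes the dynamic program exact; for models with billions of weights and richer parameter choices, a general MIP solver, as the abstract describes, is the more practical route.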

Type

Publication

In 17th International Conference on Agents and Artificial Intelligence
